Ian Jackson: How to use Rust on Debian (and Ubuntu, etc.)

tl;dr: Don't just apt install rustc cargo. Either do that and make sure to use only Rust libraries from your distro (with the tiresome config runes below); or, just use rustup.

- Don't do the obvious thing; it's never what you want
- Option 1: WTF, no I don't want curl|bash
- Option 2: Biting the curl|bash bullet
- OMG what a mess

Don't do the obvious thing; it's never what you want

If you just apt install rustc cargo, you will end up using Debian's compiler but upstream libraries, directly and uncurated from crates.io.

This is not what you want. There are about two reasonable things to do, depending on your preferences.

Q. Download and run whatever code from the internet?

The key question is this:

Are you comfortable downloading code, directly from hundreds of upstream Rust package maintainers, and running it?

That's what cargo does. It's one of the main things it's for. Debian's cargo behaves, in this respect, just like upstream's. Let me say that again:

Debian's cargo promiscuously downloads code from crates.io just like upstream cargo.

So if you use Debian's cargo in the most obvious way, you are still downloading and running all those random libraries. The only thing you're avoiding downloading is the Rust compiler itself, which is precisely the part that is most carefully maintained, and of least concern.

Debian's cargo can even download from crates.io when you're building official Debian source packages written in Rust: if you run dpkg-buildpackage, the downloading is suppressed; but a plain cargo build will try to obtain and use dependencies from the upstream ecosystem. ("Happily", if you do this, it's quite likely to bail out early due to version mismatches, before actually downloading anything.)

Option 1: WTF, no I don't want curl|bash

OK, but then you must limit yourself to libraries available within Debian. Each Debian release provides a curated set. It may or may not be sufficient for your needs. Many capable programs can be written using the packages in Debian.

But any upstream Rust project that you encounter is likely to be a pain to get working, unless their maintainers specifically intend to support this. (This is fairly rare, and the Rust tooling doesn't make it easy.)

To go with this plan, apt install rustc cargo and put this in your configuration, in $HOME/.cargo/config.toml:

    [source.debian-packages]
    directory = "/usr/share/cargo/registry"

    [source.crates-io]
    replace-with = "debian-packages"

This makes cargo look in /usr/share for dependencies, rather than downloading them from crates.io. You must then install the librust-FOO-dev packages for each of your dependencies, with apt.

This will allow you to write your own program in Rust, and build it using cargo build.
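As a rough illustration of the Option 1 setup, the steps could be scripted along these lines. This is only a sketch: write_debian_cargo_config is a hypothetical helper name invented here (it is not part of cargo or Debian), and FOO stands for whichever dependencies your program needs. The helper refuses to overwrite an existing config.toml, on the assumption that you would rather merge the stanzas by hand in that case.

```shell
#!/bin/sh
# Sketch only: write_debian_cargo_config is a hypothetical helper, not part of
# cargo or Debian. It writes the source-replacement config quoted above into
# the given cargo config directory, refusing to clobber an existing file.
write_debian_cargo_config() {
    dir="$1"
    if [ -e "$dir/config.toml" ]; then
        echo "refusing to overwrite $dir/config.toml; merge by hand" >&2
        return 1
    fi
    mkdir -p "$dir" || return 1
    cat > "$dir/config.toml" <<'EOF'
[source.debian-packages]
directory = "/usr/share/cargo/registry"

[source.crates-io]
replace-with = "debian-packages"
EOF
}

# Typical use (commented out so that sourcing this file changes nothing):
#   sudo apt install rustc cargo librust-FOO-dev   # FOO = each dependency
#   write_debian_cargo_config "$HOME/.cargo"
#   cargo build
```

With the config in place, a missing dependency should show up as a cargo error about a crate it cannot find in the replacement source, which is your cue to look for the corresponding librust-FOO-dev package.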

Option 2: Biting the curl|bash bullet

If you want to build software that isn't specifically targeted at Debian's Rust, you will probably need to use packages from crates.io, not from Debian.

If you're going to do that, there is little point not using rustup to get the latest compiler. rustup's install rune is alarming, but cargo will be doing exactly the same kind of thing, only worse (because it trusts many more people) and more hidden.

So in this case: do run the curl|bash install rune.

Hopefully the Rust project you are trying to build has shipped a Cargo.lock; that contains hashes of all the dependencies that they last used and tested. If you run cargo build --locked, cargo will only use those versions, which are hopefully OK.

And you can run cargo audit to see if there are any reported vulnerabilities or problems. But you'll have to bootstrap this with cargo install --locked cargo-audit; cargo-audit is from the RUSTSEC folks who do care about this kind of thing, so hopefully running their code (and their dependencies) is fine. Note the --locked which is needed because cargo's default behaviour is wrong.
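Put together, the Option 2 routine might look something like the following. Again this is a sketch rather than a recommendation of a particular workflow: build_locked is a hypothetical wrapper name made up for this example, and the only cargo invocations used are the ones already mentioned above.

```shell
#!/bin/sh
# Sketch only: build_locked is a hypothetical wrapper, not a cargo feature.
# It refuses to build unless the project ships a Cargo.lock, then builds
# with exactly the dependency versions recorded there.
build_locked() {
    if [ ! -f Cargo.lock ]; then
        echo "no Cargo.lock here; upstream did not pin its dependencies" >&2
        return 1
    fi
    cargo build --locked
}

# One-off bootstrap of cargo-audit, then check the lockfile against the
# RUSTSEC advisory database (commented out: both need network access):
#   cargo install --locked cargo-audit
#   cargo audit
```

Run build_locked from the top of the project you are trying to build; if it bails out, you are back to deciding whether you trust whatever versions cargo would pick today.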

Privilege separation

This approach is rather alarming. For my personal use, I wrote a privsep tool which allows me to run all this upstream Rust code as a separate user.

That tool is nailing-cargo. It's not particularly well productised, or tested, but it does work for at least one person besides me. You may wish to try it out, or consider alternative arrangements. Bug reports and patches welcome.

OMG what a mess

Indeed. There are a large number of technical and social factors at play.

cargo itself is deeply troubling, both in principle, and in detail. I often find myself severely disappointed with its maintainers' decisions. In mitigation, much of the wider Rust upstream community does take this kind of thing very seriously, and often makes good choices. RUSTSEC is one of the results.

Debian's technical arrangements for Rust packaging are quite dysfunctional, too: IMO the scheme is based on fundamentally wrong design principles. But, the Debian Rust packaging team is dynamic, constantly working the update treadmills; and the team is generally welcoming and helpful.

Sadly last time I explored the possibility, the Debian Rust Team didn't have the appetite for more fundamental changes to the workflow (including, for example, changes to dependency version handling). Significant improvements to upstream cargo's approach seem unlikely, too; we can only hope that eventually someone might manage to supplant it.

edited 2024-03-21 21:49 to add a cut tag

Last week we held our promised miniDebConf in Santa Fe City, Santa Fe province, Argentina, just across the river from Paraná, where I have spent almost six beautiful months I will never forget.

Closing arguments in the trial between various people and

Like each month, have a look at the work funded by

I like using one machine and setup for everything, from serious development work to hobby projects to managing my finances. This is very convenient, as often the lines between these are blurred. But it is also scary if I think of the large number of people who I have to trust to not want to extract all my personal data. Whenever I run a

My brain is currently suffering from an overload caused by grading student assignments.

In search of a somewhat productive way to procrastinate, I thought I would share a small script I wrote sometime in 2023 to facilitate my grading work.

I use Moodle for all the classes I teach and students use it to hand in their papers. When I'm ready to grade them, I download the ZIP archive Moodle provides containing all their PDF files and comment them

My brain is currently suffering from an overload caused by grading student

assignments.

In search of a somewhat productive way to procrastinate, I thought I

would share a small script I wrote sometime in 2023 to facilitate my grading

work.

I use Moodle for all the classes I teach and students use it to hand me out

their papers. When I'm ready to grade them, I download the ZIP archive Moodle

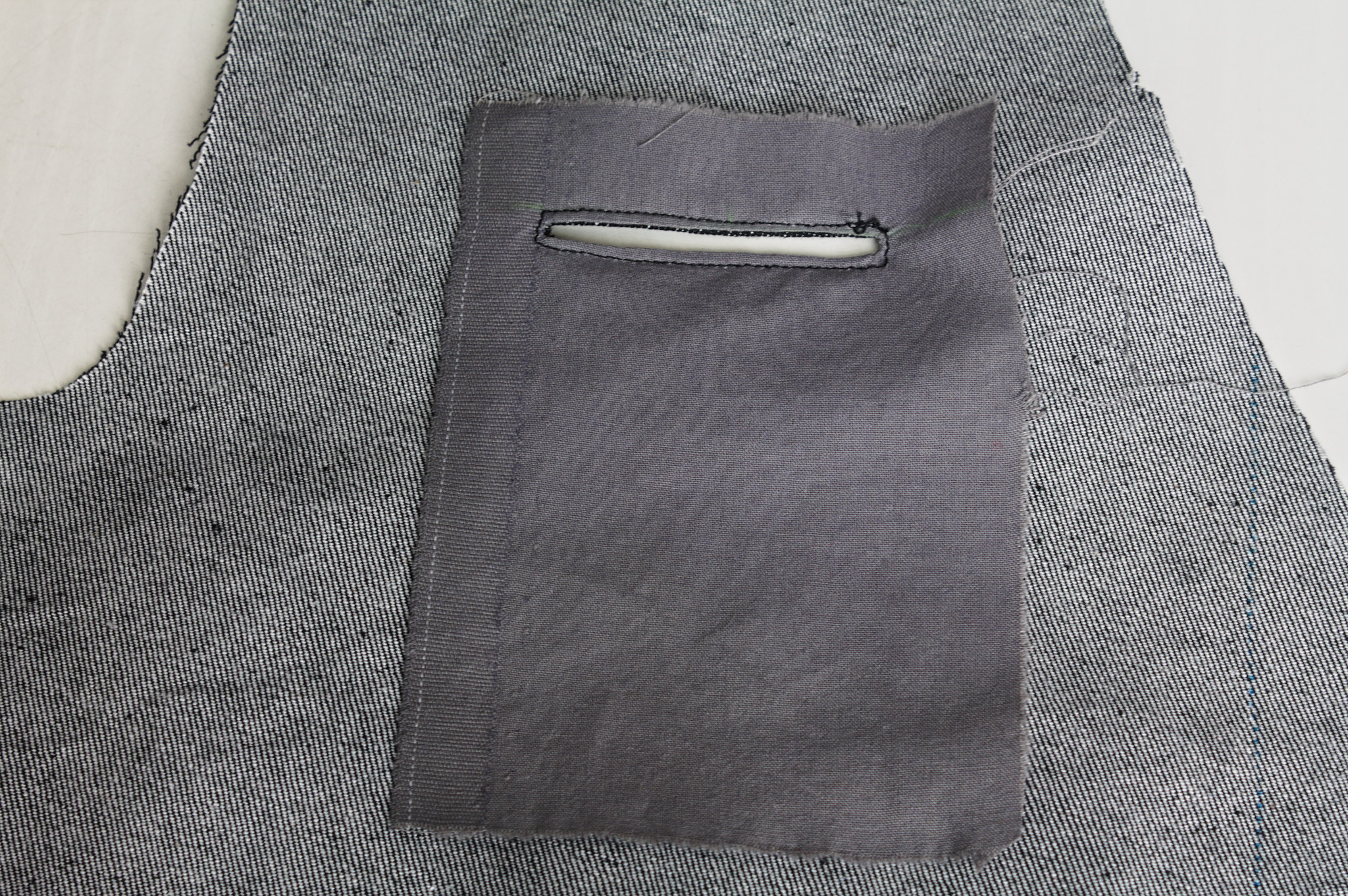

provides containing all their PDF files and comment them  I had finished sewing my jeans, I had a scant 50 cm of elastic denim

left.

Unrelated to that, I had just finished drafting a vest with Valentina,

after

The other thing that wasn't exactly as expected is the back: the pattern splits the bottom part of the back to give it "sufficient spring over the hips". The book was probably published in 1892, but I had already found when drafting the foundation skirt that its idea of "hips" includes a bit of structure. The "enough steel to carry a book or a cup of tea" kind of structure. I should have expected a lot of spring, and indeed that's what I got.

To fit the bottom part of the back on the limited amount of fabric I had to piece it, and I suspect that the flat felled seam in the center is helping it stick out; I don't think it's exactly bad, but it is a peculiar look.

Also, I had to cut the back on the fold, rather than having a seam in the middle and the grain on a different angle.

Anyway, my next waistcoat project is going to have a linen-cotton lining and silk fashion fabric, and I'd say that the pattern is good enough that I can do a few small fixes and cut it directly in the lining, using it as a second mockup.

As for the wrinkles, there are quite a few, but it looks like something that will be solved by a bit of lightweight boning in the side seams and in the front; it will be seen in the second mockup and the finished waistcoat.

As for this one, it's definitely going to get some wear as is, in casual contexts. Except. Well, it's a denim waistcoat, right? With a very different cut from the "get a denim jacket and rip out the sleeves", but still a denim waistcoat, right? The kind that you cover in patches, right?

I was working on what looked like a good pattern for a pair of jeans-shaped trousers, and I knew I wasn't happy with 200-ish g/m² cotton-linen for general use outside of deep summer, but I didn't have a source for proper denim either (I had been low-key looking for it for a long time).

Then one day I looked at an article I had saved about fabric shops that sell technical fabric and while window-shopping on one I found that they had a decent selection of denim in a decent weight.

I decided it was a sign, and decided to buy the two heaviest denims they had: a

The shop sent everything very quickly, the courier took their time (oh, well) but eventually delivered my fabric on a sunny enough day that I could wash it and start as soon as possible on the first pair.

The pattern I did in linen was a bit too fitting, but I was afraid I had widened it a bit too much, so I did the first pair in the 100% cotton denim. Sewing them took me about a week of early mornings and late afternoons, excluding the weekend, and my worries proved false: they were mostly just fine.

The only bit that could have been a bit better is the waistband, which is a tiny bit too wide on the back: it's designed to be so for comfort, but the next time I should pull the elastic a bit more, so that it stays closer to the body.

I wore those jeans daily for the rest of the week, and confirmed that they were indeed comfortable and the pattern was ok, so on the next Monday I started to cut the elastic denim.

I decided to cut and sew two pairs, assembly-line style, using the shaped waistband for one of them and the straight one for the other one.

I started working on them on a Monday, and on that week I had a couple of days when I just couldn't, plus I completely skipped sewing on the weekend, but on Tuesday the next week one pair was ready and could be worn, and the other one only needed small finishes.

And I have to say, I'm really, really happy with the ones with a shaped waistband in elastic denim, as they fit even better than the ones with a straight waistband gathered with elastic. Cutting it requires more fabric, but I think it's definitely worth it.

But it will be a problem for a later time: right now three pairs of jeans are a good number to keep in rotation, and I hope I won't have to sew jeans for myself for quite some time.

I think that the leftovers of plain denim will be used for a skirt or something else, and as for the leftovers of elastic denim, well, there aren't a lot left, but what else I did with them is the topic for another post.

Thanks to the fact that they are all slightly different, I've started to keep track of the times when I wash each pair, and hopefully I will be able to see whether the elastic denim is significantly less durable than the regular, or the added weight compensates for it somewhat. I'm not sure I'll manage to remember about saving the data until they get worn, but if I do it will be interesting to know.

Oh, and I say I've finished working on jeans and everything, but I still haven't sewn the belt loops to the third pair. And I'm currently wearing them. It's a sewist tradition, or something. :D

A first maintenance of the still fairly new package

This version mostly just updates to the newest releases of

Mee Goreng, a dish made of noodles in Malaysia.

Me at Petronas Towers.

Photo with Malaysians.

Witch Wells AZ Sunset
